July 14, 2010Category theory crash course for the working Haskell programmer.

A frequent question that comes up when discussing the dual data structures—most frequently comonad—is “What does the co- mean?” The snippy category theory answer is: “Because you flip the arrows around.” This is confusing, because if you look at one variant of the monad and comonad typeclasses:

class Monad m where

(>>=) :: m a -> (a -> m b) -> m b

return :: a -> m a

class Comonad w where

(=>>) :: w a -> (w a -> b) -> w b

extract :: w a -> a

there are a lot of “arrows”, and only a few of them flipped (specifically, the arrow inside the second argument of the >>= and =>> functions, and the arrow in return/extract). This article will make precise what it means to “flip arrows” and use the “dual category”, even if you don’t know a lick of category theory.

Notation. There will be several diagrams in this article. You can read any node (aka object) as a Haskell type, and any solid arrow (aka morphism) as a Haskell function between those two types. (There will be arrows of different colors to distinguish concepts.) So if I have f :: Int -> Bool, I will draw that as:

Functors. The Functor typeclass is familiar to the working Haskell programmer:

class Functor t where

fmap :: (a -> b) -> (t a -> t b)

While the typeclass seems to imply that there is only one part to an instance of Functor, the implementation of fmap, there is another, almost trivial part: t is now a type function of kind * -> *: it takes a type (a) and outputs a new type (unimaginatively named t a). So we can represent it by this diagram:

The arrows are colored differently for a good reason: they are indicating completely different things (and just happen to be on the same diagram). While the red arrow represents a concrete function a -> b (the first argument of fmap), the dashed blue arrow does not claim that a function a -> t a exists: it’s simply indicating how the functor maps from one type to another. It could be a type with no legal values! We could also posit the existence of a function of that type; in that case, we would have a pointed functor:

class Functor f => Pointed f where

pure :: a -> f a -- aka return

But for our purposes, such a function (or is it?) won’t be interesting until we get to monads.

You may have heard of the Functor law, an equality that all Functors should satisfy. Here it is in textual form:

fmap (g . f) == fmap g . fmap f

and here it is in pictorial form:

One might imagine the diagram as a giant if..then statement: if f, g and g . f exist, then fmap f, fmap g and fmap (g . f) exist (just apply fmap to them!), and they happen to compose in the same way.

Now, it so happens that if we have f :: a -> b and g :: b -> c, g . f is also guaranteed to exist, so we didn’t really need to draw the arrow either. This is such an implicit notion of function composition, so we will take a moment and ask: why is that?

It turns out that when I draw a diagram of red arrows, I’m drawing what mathematicians call a category with objects and arrows. The last few diagrams have been drawn in what is called the category Hask, which has objects as Haskell types and arrows as Haskell functions. The definition of a category builds in arrow composition and identities:

class Category (~>) where

(.) :: (b ~> c) -> (a ~> b) -> (a ~> c)

id :: a ~> a

(you can mentally substitute ~> with -> for Hask) and there are also laws that make arrow composition associative. Most relevantly, the categorical arrows are precisely the arrows you flip when you talk about a dual category.

“Great!” you say, “Does that mean we’re done?” Unfortunately, not quite yet. It is true that the comonad is a monad for an opposite (or dual) category, it is not the category Hask. (This is not the category you are looking for!) Still, we’ve spent all this time getting comfortable drawing diagrams in Hask, and it would be a shame to not put this to good use. Thus, we are going to see an example of the dual category of Hask.

Contravariant functors. You may have heard fmap described as a function that “lifts” functions in to a functorial context: this “functorial context” is actually just another category. (To actually mathematically show this, we’d need to show that the functor laws are sufficient to preserve the category laws.) For normal functors, this category is just Hask (actually a subcategory of it, since only types t _ qualify as objects). For contravariant functors, this category is Hask^op.

Any function f :: a -> b in Hask becomes a function contramap f :: f b -> f a in a contravariant functor:

class ContraFunctor t where

contramap :: (a -> b) -> t b -> t a

Here is the corresponding diagram:

Notice that we’ve partitioned the diagram into two sections: one in Hask, and one in Hask^op, and notice how the function arrows (red) flip going from one category to the other, while the functor arrows (blue) have not flipped. t a is still a contravariant functor value.

You might be scratching your head and wondering: is there any instance of contramap that we could actually use? In fact, there is a very simple one that follows directly from our diagram:

newtype ContraF a b = ContraF (b -> a)

instance ContraFunctor (ContraF a) where

contramap g (ContraF f) = ContraF (f . g)

Understanding this instance is not too important for the rest of this article, but interested readers should compare it to the functor on normal functions. Beyond the newtype wrapping and unwrapping, there is only one change.

Natural transformations. I’m going to give away the punchline: in the case of comonads, the arrows you are looking for are natural transformations. What are natural transformations? What kind of category has natural transformations as arrows? In Haskell, natural transformations are roughly polymorphic functions: they’re mappings defined on functors. We’ll notate them in gray, and also introduce some new notation, since we will be handling multiple Functors: subscripts indicate types: fmap_t is fmap :: (a -> b) -> t a -> t b) and η_a is η :: t a -> s a.

Let’s review the three types of arrows flying around. The red arrows are functions, they are morphisms in the category Hask. The blue arrows are indicate a functor mapping between types; they also operate on functions to produce more functions (also in the category Hask: this makes them endofunctors). The gray arrows are also functions, so they can be viewed as morphisms in the category Hask, but sets of gray arrows across all types (objects) in Hask from one functor to another collectively form a natural transformation (two components of a natural transformation are depicted in the diagram). A single blue arrow is not a functor; a single gray arrow is not natural transformations. Rather, appropriately typed collections of them are functors and natural transformations.

Because f seems to be cluttering up the diagram, we could easily omit it:

Monad. Here is the typeclass, to refresh your memory:

class Monad m where

(>>=) :: m a -> (a -> m b) -> m b

return :: a -> m a

You may have heard of an alternate way to define the Monad typeclass:

class Functor m => Monad m where

join :: m (m a) -> m a

return :: a -> m a

where:

m >>= f = join (fmap f m)

join m = m >>= id

join is far more rooted in category theory (indeed, it defines the natural transformation that is the infamous binary operation that makes monads monoids), and you should convince yourself that either join or >>= will get the job done.

Suppose that we know nothing about what monad we’re dealing with, only that it is a monad. What sort of types might we see?

Curiously enough, I’ve colored the arrows here as natural transformations, not red, as we have been doing for undistinguished functions in Hask. But where are the functors? m a is trivial: any Monad is also a valid instance of functor. a seems like a plain value, but it can also be treated as Identity a, that is, a inside the identity functor:

newtype Identity a = Identity a

instance Functor Identity where

fmap f (Identity x) = Identity (f x)

and Monad m => m (m a) is just a functor two skins deep:

fmap2 f m = fmap (fmap f) m

or, in point-free style:

fmap2 = fmap . fmap

(Each fmap embeds the function one functor deeper.) We can precisely notate the fact that these functors are composed with something like (cribbed from sigfpe):

type (f :<*> g) x = f (g x)

in which case m :<*> m is a functor.

While those diagrams stem directly from the definition of a monad, there are also important monad laws, which we can also draw diagrams for. I’ll draw just the monad identity laws with f:

return_a indicates return :: a -> m a, and join_a indicates join :: m (m a) -> m a. Here are the rest with f removed:

You can interpret light blue text as “fresh”—it is the new “layer” created (or compressed) by the natural transformation. The first diagram indicates the identity law (traditionally return x >>= f == f x and f >>= return == f); the second indicates associativity law (traditionally (m >>= f) >>= g == m >>= (\x -> f x >>= g)). The diagrams are equivalent to this code:

join . return == id == join . fmap return

join . join == join . fmap join

Comonads. Monads inhabit the category of endofunctors Hask -> Hask. The category of endofunctors has endofunctors as objects and (no surprise) natural transformations as arrows. So when we make a comonad, we flip the natural transformations. There are two of them: join and return.

Here is the type class:

class Functor w => Comonad w where

cojoin :: w a -> w (w a)

coreturn :: w a -> a

Which have been renamed duplicate and extract respectively.

We can also flip the natural transformation arrows to get our Comonad laws:

extract . duplicate == id == duplicate . extract

duplicate . duplicate == fmap duplicate . duplicate

Next time. While it is perfectly reasonable to derive <<= from cojoin and coreturn, some readers may feel cheated, for I have never actually discussed the functions from monad that Haskell programmers deal with on a regular basis: I just changed around the definitions until it was obvious what arrows to flip. So some time in the future, I hope to draw some diagrams for Kleisli arrows and show what that is about: in particular, why >=> and <=< are called Kleisli composition.

Apology. It being three in the morning, I’ve managed to omit all of the formal definitions and proofs! I am a very bad mathematician for doing so. Hopefully, after reading this, you will go to the Wikipedia articles on each of these topics and find their descriptions penetrable!

Postscript. You might be interested in this follow-up post about duality in simpler settings than monads/comonads.

July 12, 2010Subtitle: Massively multithreaded processors make your undergraduate CS education relevant again.

Quicksort. Divide and conquer. Search trees. These and other algorithms form the basis for a classic undergraduate algorithms class, where the big ideas of algorithm design are laid bare for all to see, and the performance model is one instruction, one time unit. “One instruction, one time unit? How quaint!” proclaim the cache-oblivious algorithm researchers and real world engineers. They know that the traditional curriculum, while not wrong, is quite misleading. It’s simply not enough to look at some theoretical computing machine: the next-generation of high performance algorithms need to be in tune with the hardware they run on. They couldn’t be more right.

Last Friday, Dr. John Feo gave a Galois Tech Talk entitled Requirements and Performance of Data Intensive, Irregular Applications (slides 1). However, Feo also brought in some slides from another deck which talked more generally about the Center for Adaptive Supercomputing Software (slides 2). The resulting presentation was a blend of the principles of massively multithreaded processor architectures—specifically the Cray XMT —and practical engineering problems encountered when writing software for such machines. Since I can’t resist putting spin on a good presentation, the title of these notes comes from a conversation I had with Feo after the tech talk; I don’t mean to demean those doing research on traditional processor, just to suggest that there is another approach that doesn’t receive as much attention as Feo thinks it should. For those of you who like puzzles, there will also be a “Why does this deadlock?” question at the end of this post.

Graphs not grids. John Feo started off by distinguishing between problems in science and problems in informatics. Scientific problems frequently take the form of grids, slowly evolving systems that exhibit the principle of locality and involve only nearest neighbor communication inside the grid. These types of problem are tackled extremely well by cluster parallelization: planar grids are obvious to partition, and nearest neighbor communication means that the majority of any computation will be local to the node containing the partition. Locality also means that, with a little care, these algorithms can play nicely with the CPU cache: for cache-oblivious algorithms, this just means partitioning the problem until it fits on-board.

Data informatics, however, sees quite different datasets. Consider the friends graph on Facebook, or the interlinking pages of the web, or the power grid of your country. These are not grids (not even the power grid): they are graphs. And unlike a quantum chromodynamic simulation, these graphs are dynamic, constantly being changed by many autonomous agents, and they can present some unique problems for traditional processors and parallelization.

Difficult graphs. There are several types of graphs that are particularly hard to run algorithms on. Unfortunately, they also tend to show up frequently in real world datasets.

Low diameter (aka “small world”) graphs are graphs in which the degree of separation between any two nodes is very low. The work necessary on these graphs explodes; any algorithm that looks at the neighbors of a node will quickly find itself having to operate on the entire graph at once. Say good bye to memory locality! The tight coupling also makes the graph difficult to partition, which is the classic way to parallelize a computation on a graph.

Scale-free graphs are graphs in which a small number of nodes have an exponentially large number of neighbors, and a large number of nodes have a small number of neighbors. These graphs are also difficult to partition and result in highly asymmetric workloads: the few nodes with large amounts neighbors tend to attract the bulk of the work.

There are also properties of graphs that can make computation more difficult. Non-planar graphs are generally harder to partition; dynamic graphs have concurrent actors inserting and deleting nodes and edges; weighted graphs can have pathological weight distributions; and finally graphs with typed edges prevent you from reducing a graph operation into a sparse matrix operation.

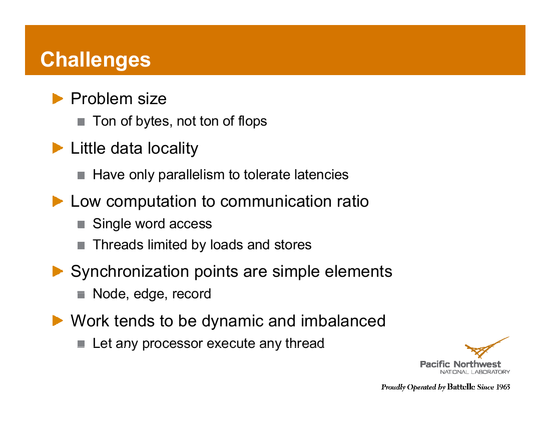

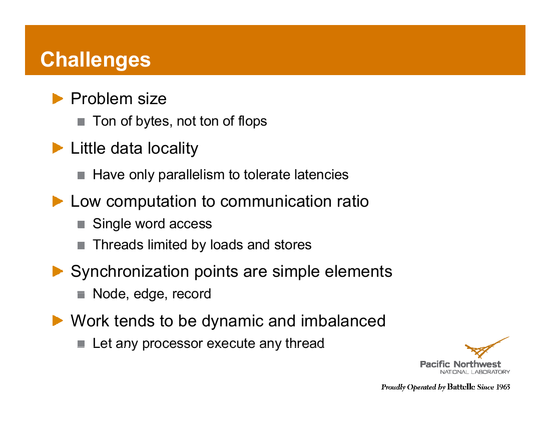

This slide from Feo sums up the immediate effects of these types of graphs nicely.

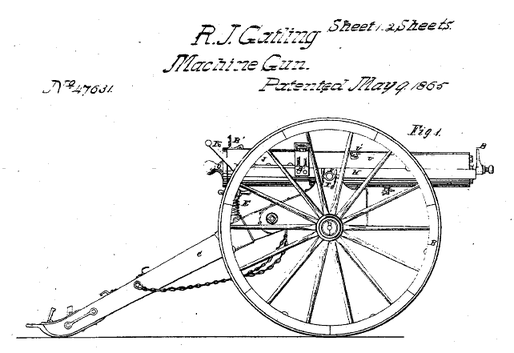

Multithreaded processors: gatling guns of the computing world. The gatling gun was one of the first well-known rapid-fire guns. Other guns simply increased their rate of fire, but quickly found that their gun barrels overheated if they attempted to fire too quickly. The gatling gun used multiple barrels, each of which individually fired at a slower rate, but when rotated in succession allowed a continuous stream of bullets to be fired while allowing the barrels not in use to cool off.

The time it takes for a discharged barrel to cool off is similar to the latency of a memory access. Since memory accesses are expensive, traditional processors try to “use less bullets” and forgo memory accesses with on-processor caches. However, a massively multithreaded processor takes a different approach: instead of trying to eliminate the memory latency, it simply hides it by context switching away from a thread that requests memory, so that by the time it switches back, the access has been completed and the data available. No need to twiddle your thumbs while waiting for data; go do something else! On specialized hardware, the researches at PNNL have been able to get processor utilization upwards of 90%; on less specialized hardware, performance targets are a bit more modest—40% or so.

Implications. Because the massively multithreaded processor is hiding memory access latency, not trying to get rid of it, traditional constraints such as memory locality become unimportant. You don’t need data to be near your computation, you don’t need to balance work across processors (since it all goes into threads that cohabit), you don’t need to handle synchronization like a time bomb. What you learned in undergraduate computer science is relevant again! In Feo’s words:

- Adaptive and dynamic methods are okay,

- Graph algorithms and sparse methods are okay, and

- Recursion, dynamic programming, branch-and-bound, dataflow are okay!

Your hardware, then, will be tailored for graph-like computations. This includes a huge global address space to shove your graph into, extremely lightweight synchronization in the form of full/empty bits (Haskell users might recognize them as extremely similar to MVars; indeed, they come from the same lineage of dataflow languages) and hardware support for thread migration, to balance out workloads. It’s something of a holy hardware grail for functional languages!

The Cray XMT is one particular architecture that John Feo and his fellow researchers have been evaluating. It easily beats traditional processors when handling algorithms that exhibit poor locality of reference; however, it is slower when you give the traditional processor and algorithm with good locality of reference.

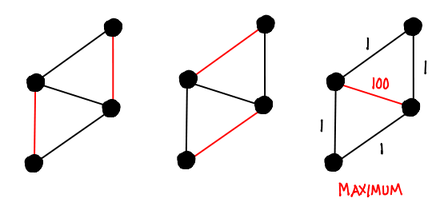

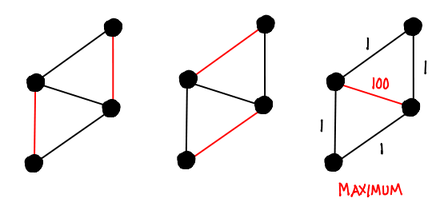

Maximum weight matching. There are many graph problems—shortest path, betweenness centrality, min/max flow, spanning trees, connected components, graph isomorphism, coloring, partitioning and equivalence, to name a few. The one Feo picked out to go into more detail about was maximum weight matching. A matching is a subset of edges such that no two edges are incident on the same vertex; so a maximum weight matching is a matching where the weights of the selected edges has been maximized (other cost functions can be considered, for example, on an unweighted graph you might want to maximize the number of edges).

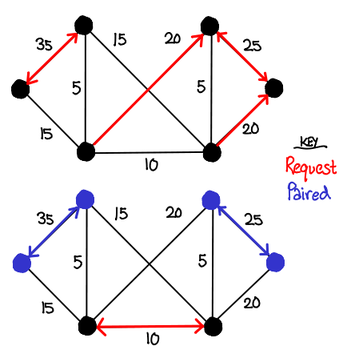

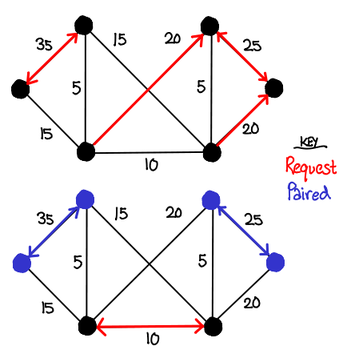

While there is a polynomial-time algorithm for finding maximum weight matchings, we can get an approximate answer more quickly with a greedy parallel algorithm called Hoepman’s algorithm. It is reminiscent of the stable marriage (Gale-Shapely) algorithm; the algorithm runs as follows: each node requests to be paired with the node across most expensive vertex local to it. If two nodes request each other, they are paired, and they reject all other pairing requests. If a node gets rejected, it tries the next highest vertex, and so on. Since a node will only accept one pairing request, edges in the pairing will never be incident on the same vertex.

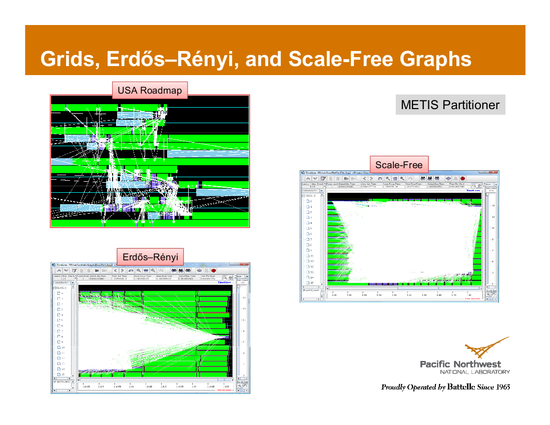

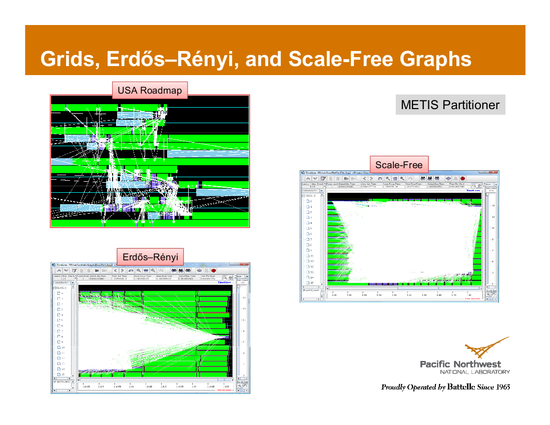

Hoepman’s algorithm relies on a theoretical machine that is able to allocate a processor per node. This doesn’t bode well for traditional cluster machines, so Halappanavar, Dobrian and Pothen proposed a parallel version that separates the graph into partitions which are given to processors, and uses queues to coordinate communicate across the partitions. Unfortunately, this approach performs extremely poorly in some cases. Feo has some visualizations of this phenomenon: the pictures below are visual depictions of processor cores, where green indicates the core is busy, and white lines indicate inter-processor communication. While the regular, planar graph of US roadways handles the problem nicely, both graphs generated by the Erdős–Rényi model and scale-free free graphs (one of the “tough” graph types we mentioned earlier) have inter-processor communication explode in sheets of white.

Machines like the Cray XMT, however, make it plausible to try to implement Hoepman’s original algorithm more closely. Give each node a thread, and implement the algorithm as described.

In order to implement signaling, we can use the full/empty bit primitive. Every edge has two full/empty bits, each endpoint owning one each. When a node attempts to pair with a vertex, it fills its own bit with 1, and then tries to read the other bit. While the bit is empty, the node’s thread blocks. If the other bit reads 1, the node is paired: fill all other bits the node owns with 0 and then terminate. If the other bit reads 0, try your next neighbor with the highest edge.

This approach doesn’t quite work, due to real-world constraints on the Cray XMT. In particular, for large graphs, it’s not possible for every thread to be run simultaneously; only a subset of nodes can be run at a time. If it just happens that every node is waiting on another node which is not being currently run, all the nodes block, and we have deadlock. In particular, the Cray XMT will not pre-empt a thread that is blocked by default, because the cost of context switching is so high. (You could turn pre-emption on, in which case this deadlock would go away, but at great cost to runtime. While the Cray does thread-level context switching every cycle, actually evicting the thread off its processor is quite expensive.)

The simple fix Feo applied was the following observation: as long as we schedule nodes closed to expensive edges, there will always be work to be done: in particular, the two nodes incident to the most expensive unpaired edge will always be able to pair. So sort the nodes in order of their most expensive vertex, and then run the algorithm. This resolved most of the deadlocks.

Ending notes. While massively multithreaded architectures are promising, there is a lot of work that still needs to be done both on the hardware side (making this technology available on commodity hardware, and not just the Cray XMT) as well as the software ecosystem (building new programming APIs to take advantage of the architecture.) Even further, the problems in this domain are so diverse that no one machine can truly attack all of them.

Nevertheless, Feo remains optimistic: if the problems are important enough, the machines will get built.

Puzzle. Even with the sorting modification, the implementation of maximum matching on the Cray XMT with preempting disabled still deadlocks on some large graphs. What graphs cause it to deadlock, and what is an easy way to fix the problem? (According to Feo, it took him three days to debug this deadlock! And no, turning on preemption is not the answer.) Solution will be posted on Friday.

(There might be answers in the comment section, so avert your eyes if you don’t want to be spoiled.)

Update. I’ve removed the link to the CACM article; while I thought it was timely for Reddit readers, it implied that Varnish’s designer was a “young algorithms designer corrupted by cache locality”, which is completely false. The expression was meant to express Feo’s general dissatisfaction with the general preoccupation of the algorithms community towards the complicated cache-aware/oblivious algorithms, and not directed at anyone in particular.

(THIS SPACE LEFT INTENTIONALLY BLANK)

July 9, 2010Update. While this blog post presents two true facts, it gets the causal relationship between the two facts wrong. Here is the correction.

Attention conservation notice. Don’t use unsafe in your FFI imports! We really mean it!

Consider the following example in from an old version of Haskellwiki’s FFI introduction:

{-# INCLUDE <math.h> #-}

{-# LANGUAGE ForeignFunctionInterface #-}

module FfiExample where

import Foreign.C -- get the C types

-- pure function

-- "unsafe" means it's slightly faster but can't callback to haskell

foreign import ccall unsafe "sin" c_sin :: CDouble -> CDouble

sin :: Double -> Double

sin d = realToFrac (c_sin (realToFrac d))

The comment blithely notes that the function can’t “callback to Haskell.” Someone first learning about the FFI might think, “Oh, that means I can put most unsafe on most of my FFI declarations, since I’m not going to do anything advanced like call back to Haskell.”

Oh my friend, if only it were that simple!

Recall that when you create a thread in Haskell with forkIO, you’re not creating a real operating system thread; you’re creating a green thread that Haskell’s runtime system manages across its pool of operating system threads. This is usually very good: real threads are heavyweight, but Haskell threads are light and you can use a lot of them without paying too much. But here’s the rub:

The runtime system cannot preempt unsafe FFI calls!

In particular, when you invoke an unsafe FFI import, you effectively suspend everything else going on in the system: Haskell is not able to preempt it (in particular unsafe indicated that there was no need to save the state of the RTS), and the foreign code will keep running by itself until it finishes.

Don’t believe me? Try it out yourself (I conducted my tests on 6.12.1). You’ll need a few files:

/* cbit.c */

#include <stdio.h>

int bottom(int a) {

while (1) {printf("%d\n", a);sleep(1);}

return a;

}

/* cbit.h */

int bottom(int a);

And UnsafeFFITest.hs:

{-# LANGUAGE ForeignFunctionInterface #-}

import Foreign.C

import Control.Concurrent

main = do

forkIO $ do

safeBottom 1

return ()

yield

print "Pass (expected)"

forkIO $ do

unsafeBottom 2

return ()

yield

print "Pass (not expected)"

foreign import ccall "cbit.h bottom" safeBottom :: CInt -> IO CInt

foreign import ccall unsafe "cbit.h bottom" unsafeBottom :: CInt -> IO CInt

Compile and run the relevant files with:

gcc -c -o cbit.o cbit.c

ghc -threaded --make UnsafeFFITest.hs cbit.o

./UnsafeFFITest +RTS -N4

The output you see should be similar to this:

ezyang@javelin:~/Dev/haskell/unsafe-ffi$ ./UnsafeFFITest +RTS -N2

1

"Pass (expected)"

2

1

2

1

2

The first call played nice and let Haskell move along, but the second call didn’t. Some things to try for yourself include swapping the order of the forks, using forkOS (which many people, including myself, incorrectly assumed creates another operating system call) and changing the RTS option -N.

What does this mean? Essentially, only if you’re really sure Haskell will never have to preempt your C call (which I would not be comfortable saying except for the smallest, purest C functions), don’t use unsafe. It’s not worth it. Safety first!

Postscript. Thanks #haskell for helping me hash out this line of thought (I’d run into this behavior earlier, but it hadn’t occurred to me that it was bloggable.)

Postscript 2. Thanks to Simon Marlow for clarifying some mistakes that I made in my original treatment of the topic. If you’re interested in more details about the interaction of concurrency and the FFI, check out the paper he pointed to: Extending the Haskell Foreign Function Interface with Concurrency.

July 7, 2010Tapping away at a complex datastructure, I find myself facing a veritable wall of Babel.

“Zounds!” I exclaim, “The GHC gods have cursed me once again with a derived Show instance with no whitespace!” I mutter discontently to myself, and begin pairing up parentheses and brackets, scanning the sheet of text for some discernible feature that may tell me of the data I am looking for.

But then, a thought comes to me: “Show is specified to be a valid Haskell expression without whitespace. What if I parsed it and then pretty-printed the resulting AST?”

Four lines of code later (with the help of Language.Haskell)…

Ah, much better!

How to use it. In your shell:

cabal install groom

and in your program:

import Text.Groom

main = putStrLn . groom $ yourDataStructureHere

Update. Gleb writes in to mention ipprint which does essentially the same thing but also has a function for putStrLn . show and has some tweaked defaults including knowledge of your terminal size.

Update 2. Don mentions to me the pretty-show package by Iavor S. Diatchki which also does similar functionality, and comes with an executable that lets you prettify output offline!

July 5, 2010A short thought from standing in line at the World Expo: Little’s law is a remarkable result that relates the number of people in a queue, the arrival rate of people to the queue, and the time spent waiting in the queue. It seems that it could be easily applied to a most universal feature of theme parks: waiting queues. Instead of such unreliable methods as giving visitors tokens to test how long it takes to traverse some portion of the line and then eyeballing the wait time from there, it would be a simple matter to install two gates: one to count incoming visitors and one to count outgoing visitors, and with this data derive an instantaneous “wait time in queue” figure based on a smoothed running average of queue size and arrival rate. Added benefit for being electronic, which means you can easily beam it to information boards across the park!

It occurs to me that I don’t have any evidence that theme parks aren’t already using this technique, except maybe for the fact that their posted wait times are wildly inaccurate.

July 2, 2010Attention conservation notice. Purely functional programming demonstrates the same practices recommended by object-oriented MVC practice.

Model-View-Controller is a widely used object-oriented design pattern for organizing functionality in an application with a user interface. I first ran across it in my early days programming web applications. The Model/View separation made deep intuitive sense to me as a PHP programmer: without it, you’d end up with spaghetti templates with HTML print statements interleaved with MySQL queries. But Controller was always a little wishy-washy. What exactly did it do? It was some sort of “glue” code, the kind of stuff that bound together the Model and View and gave them orders. But this was always a sort of half-hearted answer for me (where should input validation go?), and soon I left the world of web applications, my questions unanswered.

Having been exposed to purely functional programming, I now believe that the controller and model/view separation is precisely the separation between side-effectful code (IO) and pure code.

The controller depends on the model and the view, but the model and view should not (directly) depend on the controller. Pure code and impure code don’t mix freely. In particular, you’re not allowed to reference impure code from pure code (unless you use unsafePerformIO). However, impure code can call pure code (although there may be some technical details involved), and the resulting code is impure. So, if the Controller is impure code and the Model/View is pure code, separating the two is simply making sure that if we have any code that is impure, we’ve extracted as much of the pure computation out of it as possible. Stated differently, if I have a function that reads and writes data, and there are lines in it that don’t have anything to do with IO, I should move them into their own function. Maybe those lines are the templating system, in which case it’s View; maybe those lines are running some complicated equation, in which case it’s Model. Pure/impure doesn’t capture the model/view distinction.

The controller receives input and initiates a response. So, the controller is input-output, i.e. IO.

The controller handles events that affect the model or the view. Pure code sort of lives in a vacuum: it can do computation, but it can’t do anything useful, since it can’t have any side effects and thus has no way for us to tell it what to compute, or to view the result of the computation. Impure code is the way we get anything done by handing off this information to our pure code.

There are several possible objections to this division. Here are a few of them:

Most object-oriented models are stateful, and state is not pure! There is a common misconception that state isn’t pure, possibly arising from the fact that both IO and State are monads. However, I can turn a state monad into a single, pure value by simply running the state machine: code that is stateful is monadic, but it is also pure, since it doesn’t have any external side effects. Shared state is a bit trickier, and usually not pure.

Controller code doesn’t have to be impure and here’s an example. Here I’ll indulge in a bit of prescriptivism: I bet you have a model, but one that is only tangentially related to your core business logic. If you have code that parses binary strings into message objects (but doesn’t actually handle transmitting or receiving those binary strings on the network), you have a mini-model of network messages. You should probably keep it separate from your real model, but for testability you should also keep it separate from your network code. Separation of concerns may be malleable, but the little IO in your type signature is always honest.

Some parting words about the purity hair-shirt: it is fairly widely acknowledged that busting out the MVC pattern makes your application more complex initially, and in a purely functional language, you’re forced to respect the distinction from the very beginning. Thus, writing small programs can be frustrating in a purely functional language because you don’t want to use the bulky but scalable engineering practice yet, and the language is making you do so from the very beginning. Haskell gives you a lot of rope to make it pleasant again, but it takes a while to get used to. On the plus side, when your program grows, this separation will continue to be enforced, and a messy refactoring may be averted.

June 30, 2010This part six of a six part introduction to c2hs. We finally talk about what ostensibly is the point of c2hs: calling C functions from Haskell. c2hs, due to its knowledge of the C headers, can already do the work for generating FFI imports. The call hook simply tells c2hs to generate the FFI import, while the fun hook generates another Haskell function which performs marshalling.

Call. The format of call is quite simple, because like get and set, it is meant to be interleaved with other Haskell code. If I would like to invoke the readline function from readline/readline.h, a {#call readline #} would suffice; c2hs will then generate the FFI import with the correct signature and transform the call directive into the name of the FFI import.

Of course, readline doesn’t call back to Haskell, so we could add unsafe: {#call unsafe readline #}. And if you’re sure that the C function has no side-effects, you can add pure: {#call pure unsafe sin #}. If you have multiple calls to the same function using the same FFI declaration, their flags need to be consistent.

By default, the cid will be use precisely to determine the name of the FFI import; if it is not a valid Haskell identifier for a function (i.e. is capitalized) or the C function name would conflict with another, you’ll need to specify what the FFI will import as. Common conventions include prefixing the function with c_, or you can use ^ for c2hs’s capitalization conversion. {#call FooBar_Baz as ^ #} will convert to fooBarBaz (with an appropriate FFI declaration).

Fun. Because the signature of the FFI declarations will all be C types, and Haskell programs tend not to use those, and because it is a frequent operation to convert to and from the C types, there’s a little bit of automation to help you out with the fun directive. Unlike call, it’s intended to standalone as a definition, and not be embedded in code. Note that you don’t have to use fun; gtk2hs doesn’t use it, for example. However, many people find it useful.

A fun starts off much like a call: you first specify if it’s pure and/or unsafe, specify the C identifier, and the specify the Haskell name. Since the majority of your code will refer to the Haskell name, it’s usually best to specify ^ for a consistent naming convention.

From here, we need to specify what the end type of the desired Haskell function is, and how to go from those types to the C types (the marshalling functions). The c2hs tutorial has a bit to say on this topic, so we’ll take a more example oriented approach.

Primitive C types. The integral, floating point and boolean (usually an integer under the hood) primitive C types are so prevalent that c2hs will automatically use the cIntConv, cFloatConv and cFromBool/cToBool functions to marshal them if none are specified. These functions work in both directions. This directive:

{#fun pure sinf as ^

{ `Float' } -> `Float' #}

generates:

sinf :: Float -> Float

sinf a1 =

let {a1' = cFloatConv a1} in

let {res = sinf'_ a1'} in

let {res' = cFloatConv res} in

(res')

You can see that a bunch of (ugly) generated code is added to run the marshalling function on the argument, pass it to the FFI, and then another marshalling function is called on the result. Idiomatic Haskell might look like:

sinf = cFloatConv . sinf'_ . cFloatConv

If you’d like to use a different name for the marshalling function, you can specify it before the type of an argument (an “in” marshaller), or after the result (an “out” marshaller), as such:

{#fun pure sinf as ^

{ myFloatConv `Float` } -> `Float` myFloatConv

and you can just replace the relevant function calls in the generated Haskell.

String arguments. Strings also hold a special place in c2hs’s heart; null-terminated and strings needing explicit length information specified are handled with ease. Consider these two function prototypes:

void print_null_str(char *str);

void print_explicit_str(char *str, int length);

We can write the following c2hs directives:

{#fun print_null_str as ^ { `String' } -> `()' }

{#fun print_explicit_str as ^ { `String'& } -> `()' }

and they will be automatically be marshalled with withCString* and withCStringLen*.

There are several interesting things happening here. We represent a void return type using () (the empty type in Haskell). Additionally, the String parameter in print_explicit_str has an ampersand affixed to it; this means that the marshaller should produce a tuple of arguments which will be passed to the function as two separate arguments. Sure enough, withCStringLen results in a (Ptr CChar, Int), and c2hs use a slight variant withCStringLenIntConv which converts the Int into a CInt. (Note that if you need more complicated multi-argument ordering, fun is not for you.)

But perhaps the most interesting thing is the * affixed to the input marshaller, which has two effects. The first is to indicate that the input marshalling function is the IO monad, for example, the type of withCString is String -> (CString -> IO a) -> IO a. But furthermore, it indicates a function that follows the bracketed resource pattern “with”. We did not useString -> CString, since this could result in a memory leak if we don’t free the CString later! The code generated is then:

printNullStr :: String -> IO ()

printNullStr a1 =

withCString a1 $ \a1' ->

printNullStr'_ a1' >>= \res ->

return ()

printExplicitStr :: String -> IO ()

printExplicitStr a1 =

withCStringLenIntConv a1 $ \(a1'1, a1'2) ->

printExplicitStr'_ a1'1 a1'2 >>= \res ->

return ()

which makes use of hanging lambdas to keep the layout consistent.

Marshalling struct arguments. While the c2hs documentation claims that there is a default marshaller if you have the following situation in C:

struct my_struct { int b; int c; };

void frob_struct(struct my_struct *);

and in Haskell:

data MyStruct = MyStruct Int Int

instance Storable MyStruct where ...

{#pointer *my_struct as MyStructPtr -> MyStruct #}

So you should be able to write:

{#fun frob_struct as ^ { `MyStruct' } -> `()' #}

Where, the input marshaller is with*. Unfortunately, I could never get that to work; furthermore, c2hs thinks that with is a reserved word, so you’ll need to rename it in order to use it. :

withT = with

{#fun copy_struct as ^ { withT* `MyStruct' } -> `()' #}

Opaque pointer arguments. When you don’t want to perform any tomfoolery on a pointer when in Haskell, you can simply specify that the pointer is the argument and use id as the marshaller. In the previous example, copy_struct could have alternately been defined as:

{#fun copy_struct as ^ { id `MyStructPtr' } -> `()' #}

A convention is to omit Ptr from the name of the pointer type if you are only dealing with opaque pointers.

Out marshalling input arguments. A frequent pattern in C code is using pointer arguments to permit a function to return multiple results. For example, strtol has the following signature:

long int strtol(const char *nptr, char **endptr, int base);

endptr points to a pointer which will get set to the pointer at the end of the portion of the string in nptr we parsed. If we don’t care about it, we can set endptr = NULL.

Obviously, we don’t want our Haskell function to do this, and we have much easier ways of returning multiple results with tuples, so c2hs has a notion of an outmarshaller for an input argument. It also has the notion of a “fake” input argument which the user doesn’t have to pass, in case our function is completely responsible for allocating the memory the pointer we pass to the function points to.

Here’s a first attempt at writing a fun hook for strtol:

{#fun strtol as ^ {id `Ptr CChar', id `Ptr (Ptr CChar)', `Int'} -> `Int` #}

We’ve eschewed the default string marshalling because otherwise endptr won’t give us very interesting information. This version is a carbon copy of the original.

To improve this, we consider Ptr (Ptr CChar) to be a way of returning Ptr CChar. So, after the function is run, we should peek (dereference the pointer) and return the result:

{#fun strtol as ^ {id `Ptr CChar', withT* `Ptr CChar' peek*, `Int'} -> `Int' #}

peek is in IO, so it needs the asterisk, but for out marshallers it doesn’t result in any fancy bracketing usage. Now, the Haskell return type of this function is not Int; it’s (Int, Ptr CChar). :

strtol :: Ptr CChar -> Ptr CChar -> Int -> IO (Int, Ptr CChar)

strtol a1 a2 a3 =

let {a1' = id a1} in

withT a2 $ \a2' ->

let {a3' = cIntConv a3} in

strtol'_ a1' a2' a3' >>= \res ->

peek a2'>>= \a2'' ->

let {res' = cIntConv res} in

return (res', a2'')

Since we’re overwriting the original contents of the pointer, it doesn’t make much since to force the user of our function to pass it to us. We can suffix our input marshaller with - to indicate that it’s not a real Haskell argument, and use alloca instead:

{#fun strtol as ^ {id `Ptr CChar', alloca- `Ptr CChar' peek*, `Int'} -> `Int' #}

Notice that we got rid of the *; it’s one or the other. Now we have a usable function:

strtol :: Ptr CChar -> Int -> IO (Int, Ptr CChar)

strtol a1 a3 =

let {a1' = id a1} in

alloca $ \a2' ->

let {a3' = cIntConv a3} in

strtol'_ a1' a2' a3' >>= \res ->

peek a2'>>= \a2'' ->

let {res' = cIntConv res} in

return (res', a2'')

or, in idiomatic Haskell:

strtol nptr base = alloca $ \endptr -> do

result <- strtol'_ nptr endptr (cIntconv base)

end <- peek endptr

return (result, end)

Error handling. There is one last piece of functionality that we haven’t discussed, which is the - flag on an out marshaller, which causes Haskell to ignore the result. By itself it’s not ordinarily useful, but when combined with * (which indicates the action is in IO), it can be used to attach functions that check for error conditions and throw an exception if that is the case. Recall that the default output marshaller for () is void-, ignoring the output result of a function.

June 28, 2010This one’s for the MIT crowd.

I will unfortunately not be in Boston over IAP, so I won’t be able to do a redux of the class I taught last year, Advanced Typeclasses in Haskell. However, since I will be in Boston for September, it might be a good time to do cluedump for SIPB this year. I love talking about Haskell, and so I could do another talk in a similar vein (maybe something that covers rank-2 types and existential quantification?) I’ve also been thinking that doing an architectural overview of Scripts would also be good.

What would you like to see me talk about?

June 25, 2010This part five of a six part introduction to c2hs. Today, we explain how to marshal data to and from C structures.

An important note. There is a difference between struct foo and foo; c2hs only considers the latter a type, so you may need to add some typedefs of the form typedef struct foo foo in order to get c2hs to recognize these structs.

Get. The Haskell FFI has no knowledge of C structs; Haskell’s idea of reading a member of a struct is to peek at some byte offset of a memory location, which you calculated manually. This is horrid, and hsc2hs has #peek to relieve you of this non-portable drudgery. c2hs has something even simpler: you can specify {#get StructName->struct_field #} and c2hs will replace this with a lambda that does the correct peek with the correct type: (\ptr -> do {peekByteOff ptr 12 ::IO CInt}) (in the IO monad!) Note the following gotchas:

- You will need to manually convert the resulting primitive C type into a more friendly Haskell type, and

- The left hand side of the expression is a type or a struct name, not the Haskell variable containing the pointer/struct you want to peek at. That will usually go to the right of the lambda.

The get directive is actually more general than just struct access: it can dereference pointers (*StructName) or access a member without dereferencing (StructName.struct_field).

Set. The opposite of get, set lets you poke values into arbitrary memory locations. Unlike get, the value passed in is required to be a pointer (and the syntax uses periods). {#set StructName.struct_field #} expands to (\ptr val -> do {pokeByteOff ptr 12 (val::CInt)}); the pointer is the first argument and the value is the second. You also need to marshal the input value manually.

Defining Storable. If you’re not individually getting and setting fields in the struct in an opaque pointer, creating a Storable instance is a good thing to do. However, since all of the lambdas that get and set create are in the IO monad, composing them can be slightly tricky. Judicious use of monadic lifting and applicative instances can make the code a lot simpler, however:

data StructName = StructName

{ struct_field1'StructName :: Int

, struct_field2'StructName :: Int

}

instance Storable StructName where

sizeOf _ = {#sizeof StructName #}

alignment _ = 4

peek p = StructName

<$> liftM fromIntegral ({#get StructName->struct_field1 #} p)

<*> liftM fromIntegral ({#get StructName->struct_field2 #} p)

poke p x = do

{#set StructName.struct_field1 #} p (fromIntegral $ struct_field1'StructName x)

{#set StructName.struct_field2 #} p (fromIntegral $ struct_field2'StructName x)

The odd naming convention in StructName is to account for the fact that different structures can share field names, while Haskell field names may not.

Note. c2hs recently got support added for an alignment directive, which computes the alignment for a C datastructure. Unfortunately, as of 0.6.12, this has not yet been released to the general public.

Request. The paper describing c2hs states the following: “[Marshaling of compound C values to Haskell values] is more generally useful; however, often we do not really want to marshal entire C structures to Haskell.” Unfortunately, current incarnations of c2hs do not offer any optional functionality to reduce the drudgery of writing the “straightforward” Storable instance, which would be absolutely lovely. bindings-dsl and GreenCard appear to fare better in this respect.

Next time. Call and fun: marshalling redux

June 23, 2010This is part four of a six part tutorial series on c2hs. Today we discuss the simple things in c2hs, namely the type, enum, pointer, import and context directives.

Prior art. All of the directives c2hs supports are tersely described in the “tutorial” page (which would perhaps be more accurately described as a “reference manual”, not tutorial.) There is also (paradoxically) a much more informal introduction for most of the directives in c2hs’s research paper.

Type. C code will occasionally contain macro conditionals redefining a type depending on some build condition (the following is real code):

#if defined(__ccdoc__)

typedef platform_dependent_type ABC_PTRUINT_T;

#elif defined(LIN64)

typedef unsigned long ABC_PTRUINT_T;

#elif defined(NT64)

typedef unsigned long long ABC_PTRUINT_T;

#elif defined(NT) || defined(LIN) || defined(WIN32)

typedef unsigned int ABC_PTRUINT_T;

#else

#error unknown platform

#endif /* defined(PLATFORM) */

If you wanted to refer to write FFI code that referenced functions that used ABC_PTRUINT_T, you might have to have performed some guess on what the value truly is in Haskell or used the C preprocessor to reimplement the conditions. With c2hs you can retrieve the true value of a typedef with type:

type ABC_PTRUINT_T = {#type ABC_PTRUINT_T #}

Consider the case of a 64-bit Linux system (such that __ccdoc__ is undefined and LIN64 is defined), then the result is:

type ABC_PTRUINT_T = CLong

Enum. Enums show up frequently in well-written (i.e. eschewing magic numbers) C code:

enum Abc_VerbLevel

{

ABC_PROMPT = -2,

ABC_ERROR = -1,

ABC_WARNING = 0,

ABC_STANDARD = 1,

ABC_VERBOSE = 2

};

However, underneath the hood, these are really just ints, so Haskell code that wants to pass an enum value to a function has to:

- Create a new datatype to represent the enumeration, and

- Write a function that maps from that datatype to the C integer and back again for the

Enum instance.

We can have c2hs do all the work for us:

{#enum Abc_VerbLevel {underscoreToCase} deriving (Show, Eq) #}

which becomes:

data Abc_VerbLevel = AbcPrompt | AbcError | AbcWarning | AbcStandard | AbcVerbose

deriving (Show, Eq)

instance Enum Abc_VerbLevel

fromEnum AbcPrompt = -2

-- ...

Note that, as ABC_PROMPT is a very unsightly constructor in Haskell, we transform the names using the underscoreToCase algorithm as mentioned before. You can also explicitly list out the renamings:

{#enum Abc_VerbLevel {AbcPrompt, AbcError, AbcWarning, AbcStandard, AbcVerbose} #}

or change the name of the datatype:

{#enum Abc_VerbLevel as AbcVerbLevel {underscoreToCase} #}

There are two other transforms (which can combine with underscoreToCase: upcaseFirstLetter and downcaseFirstLetter, though I’m not sure when the latter would result in working Haskell code.

Pointer. Unlike C primitives, which are specified in Foreign.C.Types, Haskell needs to be told how to map pointer types (foo*) into Haskell types. Consider some struct:

struct foobar {

int foo;

int bar;

}

It is quite conceivable that there exists a data Foobar = Foobar Int Int in the Haskell codebase, in which case we would like Ptr Foobar to represent a struct foobar* in the original C code. c2hs has no way of deriving this information directly, so we give it this information:

{#pointer *foobar as FoobarPtr -> Foobar #}

This generates the code:

type FoobarPtr = Ptr Foobar

But more importantly, allows c2hs to place more specific types in the signatures it writes for FFI bindings (which we will see in the next post in the series.)

Some variations on the theme:

If you want to represent an opaque pointer whose contents will not be marshalled, you can either do empty data declarations:

data Foobar

{#pointer *foobar as FoobarPtr -> Foobar #}

or you can have c2hs generate code using the newtype trick:

{#pointer *foobar as FoobarPtr newtype #}

I prefer empty data declarations, since there’s no need to wrap and unwrap a newtype in that case: the newtype will generate:

newtype FoobarPtr = FoobarPtr (Ptr FoobarPtr)

which, for any code expecting Ptr a, needs to be unwrapped.

If you do not care for the name FoobarPtr and would just like to explicitly say Ptr Foobar, you can tell c2hs not to emit the type definition with nocode:

{#pointer *foobar -> Foobar nocode #}

If no Haskell name mapping is specified, it will simply use the C name:

-- if it was struct Foobar...

{#pointer *Foobar #}

If you would like to refer to a typedef in C which is already a pointer, just omit the asterisk:

typedef struct Foobar* FoobarPtr

{#pointer FoobarPtr #}

c2hs also has limited support for declaring pointers as foreign or stable, and generating code accordingly. I’ve not used this, except in one case where I found the generated bindings for the pointer were not flexible enough. Your mileage may vary.

Import. A C library that contains multiple header files will probably have some headers including others to get vital type definitions. If you organize your Haskell modules similarly, you need to mimic these includes: this can be done with import. :

{#import Foobar.Internal.Common #}

In particular, this sets up the pointer mappings from the other module, as well as generating the usual import statement.

Context (optional). Context has two purported purposes. The first is to specify what library the FFI declarations in the file should be linked against; however, in Cabal, this doesn’t actually do anything—so you need to still add the library to Extra-libraries. The second is to save you keystrokes by adding an implicit prefix to every C identifier you reference, in the case that the original C code was namespaced gtk_ or similarly. I personally like not needing to have to qualify my imports to the lower level API and like the visual distinction of C prefixes, so I tend to omit this. Some directives let you change the prefix locally, in particular enum.

Next time. Marshalling with get and set.