Correctness is overrated. After all, no one knows what it means for any reasonably complicated system to be “correct”, and even when we do, the mileposts move around on a daily basis. With the raison d’être of formal verification stripped away, can we still consider it a worthy goal?

Perhaps verification results in higher quality code. But this is not obviously true: correctness is not quality. We might hope that high quality code is readable and easily understood, that it should be as self-contained and independent from the rest of the system, that it is efficient and economical. There is no a priori reason to believe that verification would grant us any of these properties. No matter how horrible some code is, as long as it is correct, there exists a proof which vouches for its correctness.

Read more...

“…so that’s what we’re going to build!”

“Cool! What language are you going to write it in?”

“Well, we were thinking we were going to need three programming languages…”

“…three?”

“…and they’ll be research programming languages too…”

“Are you out of your mind?”

This was the conversation in streaming through my head when I decided that I would be writing my latest software project in Coq, Haskell and Ur/Web. I had reasonably good reasons for the choice: I wanted Coq because I didn’t actually want to implement a theorem prover from scratch, I wanted Ur/Web because I didn’t actually want to hand write JavaScript to get an AJAX interface, and I wanted Haskell because I didn’t want to write a bucket of C to get Ur/Web and Coq to talk to each other. But taken altogether the whole thing seemed a bit ludicrous, like an unholy fusion of a trinity of research programming languages.

Read more...

While working on my senior thesis, I had to write a prior work section, which ended up being a minisurvey for the particular subfield my topic was in. In the process, a little bird told me some things…

- If you can, ask someone who might know a little bit about the subject to give you the rundown: there’s a lot of knowledge in people’s heads which never got written down. But also be aware that they will probably have their blind spots.

- It is better to do the literature review later rather than earlier, after you have started digging into the topic. I have been told if you read the literature too early, you will get spoiled and stop thinking novel thoughts. But I also think there is also a little bit of “you’ll understand the literature better” if you’ve already thought about the topic on your own. Plus, it’s easy to think that everything has been done before: it’s simply not true! (But if you think this, you will get needlessly discouraged.)

- Don’t indiscriminately add papers to your database. You should have something you want to do with it: is it an important paper that you have to cite because everyone knows about it? Is it directly addressing the issue you’re dealing with? Does it happen to be particularly well written? Is it something that you could see yourself reading more carefully later? Don’t be afraid to toss the paper out; if it actually was important, you’ll run into it again later.

- Every researcher is a historian. When you look at a paper, you’re not just looking at what is written inside it, but its social context. There’s a reason why “prior work” is so important to academics. If you don’t understand a paper’s context, it’s unlikely you’ll understand the paper.

- Researchers don’t necessarily talk to each other. Pay attention to who they cite; it says a little bit about what community they’re in.

- Researchers are happy to send you copies of papers they have written (so fear not the paywall that your university hasn’t subscribed to). They may even volunteer extra information which may come in handy.

- Be methodical. You’re doing a search, and this means carefully noting down which papers you skimmed, and what you got out of them, and keeping track of other veins of research that you need to follow up on. It’s like chasing a rabbit down a hole, but if you have some clearly defined search criteria, eventually you’ll bottom out. You can prune the uninteresting papers later; the point here is to avoid duplicating work.

- Read papers critically. Not everything that is published is good; that’s the point of research!

What are your favorite maxims to keep in mind while you’re surveying the literature?

Read more...

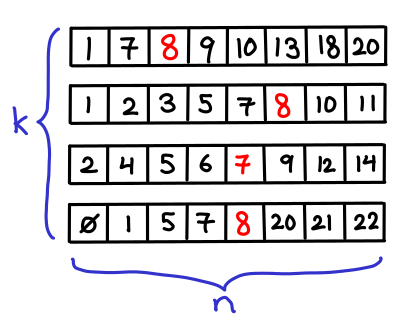

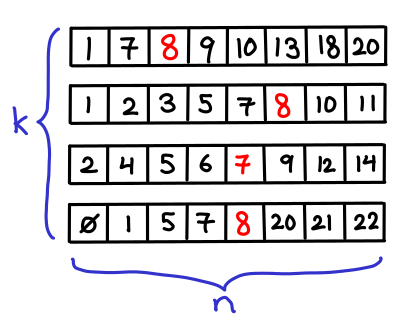

Suppose that you have k sorted arrays, each of size n. You would like to search for single element in each of the k arrays (or its predecessor, if it doesn’t exist).

Obviously you can binary search each array individually, resulting in a $O(k\lg n)$ runtime. But we might think we can do better that: after all, we’re doing the same search k times, and maybe we can “reuse” the results of the first search for later searches.

Read more...

Range trees are a data structure which lets you efficiently query a set of points and figure out what points are in some bounding box. They do so by maintaining nested trees: the first level is sorted on the x-coordinate, the second level on the y-coordinate, and so forth. Unfortunately, due to their fractal nature, range trees a bit hard to visualize. (In the higher dimensional case, this is definitely a “Yo dawg, I heard you liked trees, so I put a tree in your tree in your tree in your…”) But we’re going to attempt to visualize them anyway, by taking advantage of the fact that a sorted list is basically the same thing as a balanced binary search tree. (We’ll also limit ourselves to two-dimensional case for sanity’s sake.) I’ll also describe a nice algorithm for building range trees.

Read more...

The You could have invented… article follows a particular scheme:

- Introduce an easy to understand problem,

- Attempt to solve the problem, but get stuck doing it the “obvious” way,

- Introduce an easy to understand insight,

- Methodically work out the rest of the details, arriving at the final result.

Why does framing the problem this way help?

- While the details involved in step 4 result in a structure which is not necessarily obvious (thus giving the impression that the concept is hard to understand), the insight is very easy to understand and the rest is just “monkey-work”. The method of deriving the solution is more compressible than the solution itself, so it is easier to learn.

- Picking a very specific, easy-to-understand problem helps ground us in a concrete example, whereas the resulting structure might be too general to get a good intuition off of.

It’s very important that the problem is easy to understand, and the process of “working out the details” is simple. Otherwise, the presentation feels contrived. This method is also inappropriate when the audience is in fact smart enough to just look at the end-result and understand on an intuitive level what is going on. Usually, this is because they have already seen the examples. But for the rest of us, it is a remarkably effective method of pedagogy.

Read more...

Abstract. Proof-carrying code can be used to implement a digital-rights management scheme, in the form of a proof-verifying CPU. We describe how this scheme would work and argue that DRM implemented this way is both desirable and superior to trusted (“treacherous”) computing schemes. This scheme permits users to retain control over their own machines, while allowing for specific limitations on software capabilities. The ability to impose these limitations will become especially important when 3D printers and biosynthesis machines become ubiquitous. This essay assumes some technical knowledge, although no background in formal methods is required. (If you know how proof-carrying code works, go away; this essay is not for you.)

Read more...

POPL January 28, 2012

Last night, I returned from my very first POPL, very exhausted, and very satisfied. It was great putting faces to names, chatting with potential PhD supervisors (both from the US and in the UK), and reveling in the atmosphere.

Highlights from my files:

- Tony Hoare, on being awarded the ACM SIGPLAN Programming Languages Achievement Award, even though he has received so many other awards, “…it makes me feel a little guilty. I didn’t ask for it!”

- Hoare: “I like mistakes; if I find it’s my fault, I can rectify it. If it’s someone else’s fault, there’s not much you can do.”

- Hoare: “Simplicity is not an engineering goal.”

- Tony had just described how he retracted an accepted paper because the reasoning on it was intricate, and he didn’t think it presented program proving in a good light. The interviewer complimented him for his principles, saying that if he had a paper accepted which he didn’t think was up to snuff, he probably wouldn’t have the courage to retract it. It was “so brave.” To which Tony replies, “Well, I wish you could!” [laughter] “Unfortunately, the pressure to publish has increased. We feel obliged to publish every year, and the quality of the average paper is not improved.”

- One recurring theme: Tony Hoare mentioned that proofs and tests were not rivals, really they were just the same thing… just different. In the “Run your Research” talk, these theme came up again, where this time the emphasis was executable papers (the “run” in “Run your Research”).

- “Don’t just support local reasoning, demand it!” (Brute force proofs not allowed!)

- On JavaScript: “null is sort of object-y, while undefined is sort of primitive-y.”

- The next set come from the invited speaker from the computer networks community. “During 9/11, on average, the Internet was more stable. The reason for this was the NetOps went home.” (on the causes of network outages in practice.)

- “Cisco routers have twenty million lines of code: there’s actually an Ada interpreter in there, as well as a Lisp interpreter.”

- “It used to be acceptable to go down for 100ms… now we have video games.”

- Speaker: “I am the walrus.” (Goo goo g’ joob.)

- “I believe we do not reuse theorems. We reuse proof methods, but not the actual theorems. When we write papers, we create very shallow models, and we don’t build on previous work. It’s OK. It’s the design. It doesn’t matter too much. The SML standard was distributed with a bug report, with 100+ mistakes in the original definition. Doesn’t detract from its impact.”

- “Put on the algebraic goggles.”

- “The Navy couldn’t install it [a system for detecting when classified words were being transmitted on insecure channels], because doing so would be admitting there was a mistake.”

- “Move to something really modern, like Scheme!” (It’s like investing one trillion, and moving from the thirteenth century to the fourteenth.)

- Strother Moore (co-creator of ACL2): “After retirement, I’ll work more on ACL2, and then I’ll die.”

- “What’s the best way to make sure your C program is conforming?” “Write deterministic code.” [laughter]

Do you remember your first computer program? When you had finished writing it, what was the first thing you did? You did the simplest possible test: you ran it.

As programs increase in size, so do the amount of possible tests. It’s worth considering which tests we actually end up running: imagine the children’s game Battleship, where the ocean is the space of all possible program executions, the battleships are the bugs that you are looking for, and each individual missile you fire is a test you run (white if the test passes, red if the test fails.) You don’t have infinite missiles, so you have to decide where you are going to send them.

Read more...